
Multi-region Deployment

Multi-region Serverless infrastructure

This is a hands-on post in which we deploy a Node.js Lambda application to multiple AWS Regions.

Serverless

It is no surprise to anyone that Serverless is here to stay. I will not explain why, as plenty of posts already cover its benefits, its pros and cons, and the revolution this new approach to developing and running applications brings to the table. For a better theoretical understanding of Serverless, I recommend reading the following blog: Serverless 101

I was involved in a project that required the application to be available in all AWS Europe regions (London, Ireland, Milan, Paris, Stockholm, and Frankfurt, six in total), because it had to serve requests coming from all over the continent, which spans about 44 countries. It also had a geolocation setup so that requests from a given country were routed to the nearest region, using Cloudflare and Lambda@Edge. Lambda@Edge is a feature of Amazon CloudFront that lets you run code closer to the users of your application, which improves performance and reduces latency.
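The routing rule itself can be sketched in a few lines of TypeScript. This is a minimal sketch under stated assumptions: the country-to-region table is hypothetical and lists only a handful of the ~44 countries, and the fallback region is an arbitrary choice. The project's real routing lived in Cloudflare and Lambda@Edge, and its exact mapping is not part of this post.

```typescript
// The six European regions the application was deployed to.
type EuRegion =
  | "eu-west-2"     // London
  | "eu-west-1"     // Ireland
  | "eu-south-1"    // Milan
  | "eu-west-3"     // Paris
  | "eu-north-1"    // Stockholm
  | "eu-central-1"; // Frankfurt

// Hypothetical mapping from ISO 3166-1 alpha-2 country codes to the closest
// region. Only a few countries are listed, purely for illustration.
const COUNTRY_TO_REGION: Record<string, EuRegion> = {
  GB: "eu-west-2",
  IE: "eu-west-1",
  IT: "eu-south-1",
  FR: "eu-west-3",
  ES: "eu-west-3",
  SE: "eu-north-1",
  NO: "eu-north-1",
  FI: "eu-north-1",
  DE: "eu-central-1",
  PL: "eu-central-1",
};

// Assumed fallback for countries missing from the table.
const DEFAULT_REGION: EuRegion = "eu-central-1";

// Returns the region that should serve a request from the given country.
// Codes are normalized to upper case; unknown or missing codes fall back
// to DEFAULT_REGION.
function nearestRegion(countryCode: string | undefined): EuRegion {
  if (!countryCode) return DEFAULT_REGION;
  return COUNTRY_TO_REGION[countryCode.toUpperCase()] ?? DEFAULT_REGION;
}
```

In a Lambda@Edge function, the viewer's country code would typically come from the `CloudFront-Viewer-Country` header, which CloudFront can add to the request when configured to do so.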

Multiple regions were also needed because the application required multiple IPs: when making requests to several third parties, the source IPs must not get blacklisted, and the traffic from these IPs was expected to be around 10k requests per hour. These IPs were the ones from the API Gateway resources created in each region, where our application was also deployed.
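To put the 10k figure in perspective, the sketch below assumes that the ~10k requests per hour are the total across all six regions and that traffic is split evenly between them. Both are assumptions; the real split depended on where requests came from.

```typescript
// Expected outbound volume mentioned in the post (~10k requests per hour).
const REQUESTS_PER_HOUR = 10_000;
// Number of regions, and therefore of distinct source IPs in this setup.
const REGION_COUNT = 6;

// Per-region load, assuming an even split of traffic across regions.
function perRegionLoad(totalPerHour: number, regions: number) {
  const perRegionPerHour = totalPerHour / regions;
  return {
    perRegionPerHour,
    perRegionPerMinute: perRegionPerHour / 60,
    perRegionPerSecond: perRegionPerHour / 3600,
  };
}

const load = perRegionLoad(REQUESTS_PER_HOUR, REGION_COUNT);
console.log(load.perRegionPerHour.toFixed(0), load.perRegionPerMinute.toFixed(1)); // prints: 1667 27.8
```

Under these assumptions, each source IP sees roughly one sixth of the traffic, about 1,667 requests per hour, instead of the full 10k.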

As with every application, a CI/CD process needs to be defined: fully automatic, with no human intervention needed to get the code from the developer’s computer onto the production servers (in our case, the Lambda containers). The pipeline was built with GitHub Actions: when code is pushed to the main branch, it is built, tested, and then deployed to all six regions.

SAM to the rescue

AWS Serverless Application Model. “Build serverless applications in simple and clean syntax”.

The AWS Serverless Application Model (SAM) is an open-source framework for building serverless applications. It provides shorthand syntax to express functions, APIs, databases, and event source mappings. With just a few lines per resource, you can define the application you want and model it using YAML. During deployment, SAM transforms and expands the SAM syntax into AWS CloudFormation syntax, enabling you to build serverless applications faster.

SAM lets us define, in templates, the configuration of our functions and of the API Gateway, and even authentication, logging, and monitoring using AWS resources. In the end, it just creates CloudFormation stacks, CloudFormation being the Infrastructure as Code solution from AWS.

Let’s get our hands dirty!

Disclaimer: I am not a software developer, so the example I will show is pretty much what you would write in an introductory course when playing around with Node.js. That being said, the CI/CD involved is more complex, and you can reuse it on any other project that needs this set of capabilities or automation.

All the code can be found here: GitHub Repository

The application itself was generated with the almighty `sam init` command, which creates predefined, production-grade templates to run serverless architectures without any tears.

Our REST API application receives requests and returns the Region in which it is running, using the default environment variables that the Lambda runtime provides.
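The repository's app.js is JavaScript; what follows is a TypeScript sketch of the same idea, not the repository's code. It relies on the `AWS_REGION` environment variable, which the Lambda runtime sets for every function, and returns the API Gateway proxy response shape (`statusCode` plus a string `body`). To keep it self-contained, the environment is passed in as a parameter instead of being read from `process.env`; the `buildResponse` name and the payload fields are hypothetical.

```typescript
// Minimal shape of an API Gateway Lambda proxy integration response.
interface ProxyResult {
  statusCode: number;
  headers: Record<string, string>;
  body: string;
}

// Hypothetical JSON payload returned by the /hello endpoint.
interface HelloPayload {
  message: string;
  region: string;
}

// Environment variables, injected so the function can run outside Lambda.
type Env = Record<string, string | undefined>;

// Builds the HTTP response reporting the region the function runs in.
// In a deployed function, an exported async handler would call
// buildResponse(process.env).
function buildResponse(env: Env): ProxyResult {
  // AWS_REGION is a reserved variable that the Lambda runtime sets.
  const region = env["AWS_REGION"] ?? "unknown";
  const payload: HelloPayload = { message: `Hello from ${region}`, region };
  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Local usage, simulating the Milan region.
const example = buildResponse({ AWS_REGION: "eu-south-1" });
console.log(example.body); // {"message":"Hello from eu-south-1","region":"eu-south-1"}
```

Because the region comes from the runtime rather than from the code, the exact same artifact can be deployed unchanged to all six regions.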

Trying to make sense

- app.js

A simple REST application that reads the AWS Lambda environment variables to return the Region in which it is running.

- .github/workflows/production.yml

The file where all the magic happens and where all the deployment configuration is set. The only extra configuration it needs is the GitHub secrets for the AWS access keys: as seen in the file, we just need to create the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY secrets in our repository.

The workflow first configures the environment with the AWS credentials and then builds the application with SAM commands. Right after that, it deploys to all six regions, running the deploy command once per region with the matching TOML file located in the regions folder.

- /regions/samconfig-REGION.toml

The AWS SAM CLI supports a project-level configuration file, in TOML format, that stores default parameters for its commands. The default file name is samconfig.toml, but in our case we use one file per region and store them all in a folder called “regions”; the CI/CD pipeline then passes these files to the deploy command.
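To make the per-region layout concrete, here is a sketch that renders a minimal `samconfig-<region>.toml` for each of the six regions, plus the `sam deploy` command the pipeline would run for it after `sam build`. The stack name `multi-region-app` and the exact set of keys are assumptions, not the repository's files; `stack_name`, `region`, `capabilities`, `resolve_s3`, and `confirm_changeset` are standard deploy parameters, and `--config-file` is the SAM CLI option for pointing at a non-default configuration file.

```typescript
// The six European regions used in the post.
const REGIONS = [
  "eu-west-2",    // London
  "eu-west-1",    // Ireland
  "eu-south-1",   // Milan
  "eu-west-3",    // Paris
  "eu-north-1",   // Stockholm
  "eu-central-1", // Frankfurt
] as const;

type Region = (typeof REGIONS)[number];

// Hypothetical stack name; the repository may use a different one.
const STACK_NAME = "multi-region-app";

// Per-region config path, following the regions/samconfig-REGION.toml layout.
function configPath(region: Region): string {
  return `regions/samconfig-${region}.toml`;
}

// Renders a minimal samconfig TOML file for one region.
function renderSamConfig(region: Region): string {
  return [
    "version = 0.1",
    "",
    "[default.deploy.parameters]",
    `stack_name = "${STACK_NAME}"`,
    `region = "${region}"`,
    'capabilities = "CAPABILITY_IAM"',
    "resolve_s3 = true",
    "confirm_changeset = false",
    "",
  ].join("\n");
}

// The deploy command run once per region.
function deployCommand(region: Region): string {
  return `sam deploy --config-file ${configPath(region)}`;
}

const commands = REGIONS.map(deployCommand);
console.log(renderSamConfig("eu-south-1"));
console.log(commands.join("\n"));
```

Running the six commands one after another keeps the logs easy to follow; a GitHub Actions matrix over the regions would be an alternative that deploys them in parallel.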

Deploying our app!

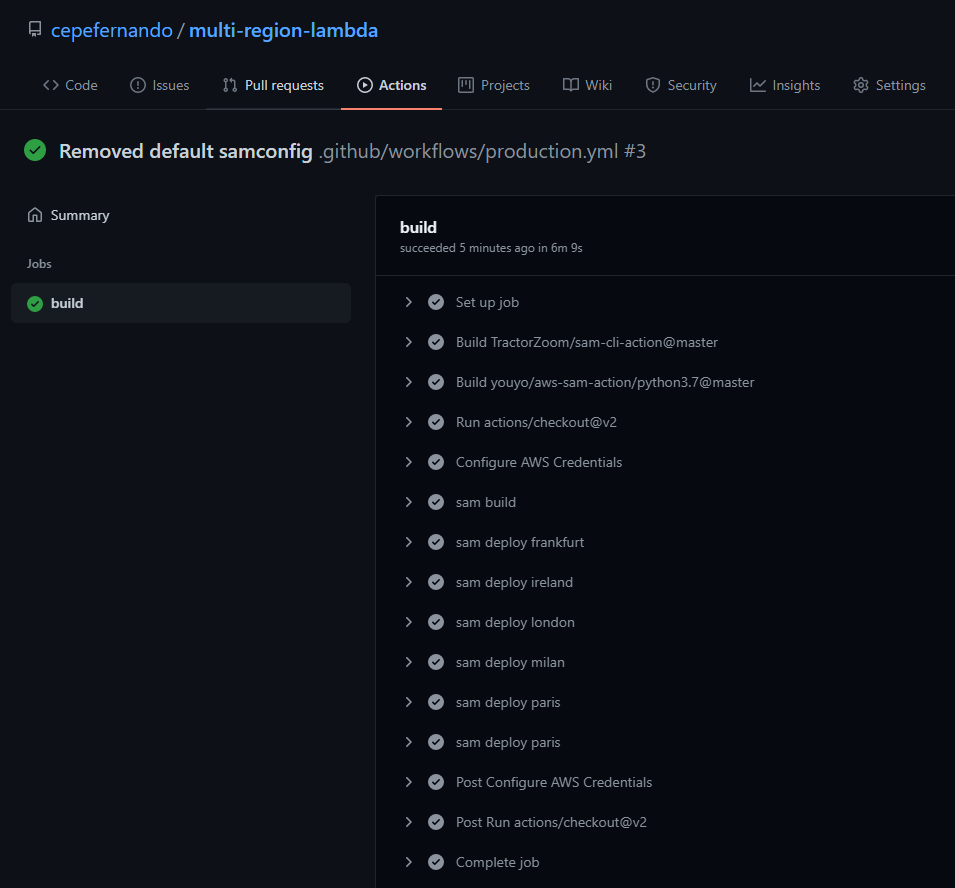

By simply pushing to the default branch, GitHub Actions runs the pipeline and deploys to all six regions. In the background, CloudFormation does all the work: it updates the code of every Lambda function and performs the initial configuration of AWS API Gateway, through which our application is exposed to the entire internet. As the screenshot shows, all the steps defined in .github/workflows/production.yml are displayed and executed one by one by GitHub Actions.

Accessing our Application

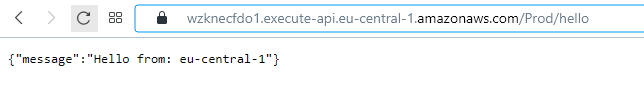

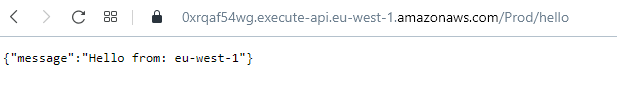

After the initial deployment, we can go to the API Gateway console in each region and see our application’s endpoint. As defined in the outputs section of the template.yaml file, our final API Gateway endpoint will be reachable at:

`https://${ServerlessRestApi}.execute-api.${AWS::Region}.amazonaws.com/Prod/hello/`

As a result, we will have:

- Frankfurt endpoint -> (eu-central-1)

- Ireland endpoint -> (eu-west-1)

- Milan endpoint -> (eu-south-1)

- Remaining 3 regions -> Try it yourself

With this in mind, we can now visit each region, grab its endpoint, and start playing with it (or hand the endpoints over to the development team).
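Filling in the output template is mechanical once each region's REST API ID is known. The sketch below builds the `/Prod/hello/` URL per region; the API IDs are hypothetical placeholders, since each region's `ServerlessRestApi` ID is generated at deployment time, and the helper names are illustrative.

```typescript
// Region codes for all six regions, including the three left as an exercise.
const REGION_NAMES: Record<string, string> = {
  "eu-central-1": "Frankfurt",
  "eu-west-1": "Ireland",
  "eu-south-1": "Milan",
  "eu-west-2": "London",
  "eu-west-3": "Paris",
  "eu-north-1": "Stockholm",
};

interface RegionalEndpoint {
  region: string;
  name: string;
  url: string;
}

// Mirrors the template output:
// https://${ServerlessRestApi}.execute-api.${AWS::Region}.amazonaws.com/Prod/hello/
function helloEndpoint(restApiId: string, region: string): string {
  return `https://${restApiId}.execute-api.${region}.amazonaws.com/Prod/hello/`;
}

// Turns a map of region -> REST API ID into a list of labelled endpoints.
function listEndpoints(apiIds: Record<string, string>): RegionalEndpoint[] {
  return Object.keys(apiIds).map((region) => ({
    region,
    name: REGION_NAMES[region] ?? region,
    url: helloEndpoint(apiIds[region], region),
  }));
}

// Hypothetical API IDs for illustration only.
const endpoints = listEndpoints({
  "eu-central-1": "abc123defg",
  "eu-west-1": "hij456klmn",
});
```

The same IDs (or the full URLs) also appear in each stack's outputs in the CloudFormation console.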

Costs

It wouldn’t be a complete blog, nor a decent solution, if I didn’t talk about the cost of keeping this application up and running in the cloud; in the end, cost is what matters most when planning to work in the cloud. To understand costs, we must understand the technology itself and how the cloud business model works for serverless technologies and architectures. Please check the following pricing documentation for the services we just used:

- https://github.com/pricing (GitHub Actions)
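As a starting point, the Lambda part of the bill follows a simple formula: the number of requests times the per-request price, plus compute measured in GB-seconds (memory in GB times duration in seconds) times the per-GB-second price. The sketch below encodes only that formula. The prices are illustrative inputs that must be checked against the current AWS Lambda pricing for each region, the ~10k requests per hour figure is reused purely as an example workload, and the free tier, API Gateway, data transfer, and logging charges are all ignored.

```typescript
// Prices are inputs, not constants: they differ by region and change over time.
interface LambdaPrices {
  perMillionRequests: number; // USD per 1M invocations
  perGbSecond: number;        // USD per GB-second of compute
}

interface Workload {
  requestsPerMonth: number;
  avgDurationMs: number;
  memoryMb: number;
}

// Estimated monthly Lambda cost in USD, ignoring the free tier.
function estimateLambdaCost(w: Workload, p: LambdaPrices): number {
  const requestCost = (w.requestsPerMonth / 1_000_000) * p.perMillionRequests;
  const gbSeconds = w.requestsPerMonth * (w.avgDurationMs / 1000) * (w.memoryMb / 1024);
  return requestCost + gbSeconds * p.perGbSecond;
}

// Illustrative inputs: 10k requests/hour for 30 days, 100 ms at 128 MB,
// with example prices to be replaced by the current regional ones.
const monthly = estimateLambdaCost(
  { requestsPerMonth: 10_000 * 24 * 30, avgDurationMs: 100, memoryMb: 128 },
  { perMillionRequests: 0.2, perGbSecond: 0.0000166667 },
);
console.log(monthly.toFixed(2)); // prints: 2.94
```

With these example inputs, 7.2 million requests cost about 1.44 USD and the 90,000 GB-seconds of compute about 1.50 USD, which shows why short, low-memory functions stay cheap even at this volume.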

There are already books about serverless costs; it is a topic that needs to be understood very well before making recommendations or planning a development strategy around it. I strongly recommend the following blog posts for a better glimpse of this important topic:

- https://techbeacon.com/enterprise-it/economics-serverless-computing-real-world-test

- https://medium.com/serverless-transformation/is-serverless-cheaper-for-your-use-case-find-out-with-this-calculator-2f8a52fc6a68

Conclusion

By now, our application should be up and running in all six AWS Regions, leveraging the power of serverless and the automation of a fully functional CI/CD pipeline built with GitHub Actions. With these practices in mind, you can build anything you want, knowing that your application is spread across the continent in multiple locations for high availability, while following other best practices for running workloads in the cloud.

Build On!