
Moving Between Clouds

The Journey into the Cloud.

This is a mostly theoretical post about the journey into the cloud: the process, and my experiences moving workloads to different cloud providers while working as a DevOps engineer, Cloud Architect, SRE, DBA, SysAdmin, Superman and firefighter.

Migrations

Over the past few years I have had the opportunity to conduct multiple “migrations into the cloud”: from on-premise vSphere systems to a given cloud provider, from one cloud provider to another, and even from some old developer’s computer into a Highly Available cloud environment (not kidding!), in every case landing the workloads in their new cloud safe and sound.

Let’s start by defining what a migration is. In simple terms, a migration can be described as the “movement from one part of something to another”. With this definition in mind, a cloud migration is simply the process of transferring data, application code, and other technology-related business processes from an on-premise or legacy infrastructure to a cloud environment/provider. This doesn’t apply only to on-premise applications being moved into the cloud; it also applies to existing applications running on one cloud provider that will be moved to another.

So you might be asking: if my entire application is already running on a cloud provider, why should I migrate it to a different one? The answer is simple: because upper management says so. In the last migrations I have conducted, that has been the default answer from the people in charge of the project or the ones who hired me.

Interestingly enough, I have found that most of the time this decision is taken because the entire company is moving towards a single cloud provider or simply wants to standardize its cloud providers. In most cases the different branches work without any communication whatsoever with their fellow divisions, resulting in a mix of cloud providers (mainly AWS, Azure and GCP), each running its applications with its own set of policies and methodologies. This might sound crazy, but it is what I have seen in small, mid-sized and large enterprises all around the globe.

Apparently, when the first migration into the cloud was conducted, the decision makers chose what they thought was the “right cloud” or the “right architecture”, but several years and some thousands of dollars later, they found out that they were wrong and that the requirements back then had not been gathered correctly; or simply that back in the day (~5 years ago) Kubernetes did not exist or was still in its infancy. In some cases it is also because the cloud representatives did a better job selling their services than the competition.

The Journey to the Cloud

As the image from turnoff.us explains perfectly, the journey is quite different for legacy applications than for applications born directly in the cloud. Talking about this voyage to the cloud might sound scary and totally overwhelming, and it is, because it is a complex process in which a lot of moving pieces (and people) need to be on the same page on several topics, and the business itself must be convinced of the benefits that the cloud model brings to the table.

Once everyone is on board, it’s just a matter of following some guidelines to achieve a successful migration to the cloud; if only that were true, I would be jobless by now. Joking aside, there are some common approaches to a successful migration, and the right one will depend fully on the application and on the business logic. Lift and Shift, Refactoring, Re-engineering and Replacing are the most common methods when moving to the cloud.

The Initial Plan

Understanding the entire set of systems and services is KEY to a successful cloud migration. Once you have a complete inventory of the assets and moving parts of an application, moving it into the cloud is a no-brainer. Across the migrations I have conducted, I have found that this should be the main focus of the engineers and team members in charge of architecture and planning for the migration.

Having all the moving parts of an application narrowed down makes everything easier. Take for example an application running on virtual machines on vSphere: a web server on Nginx serving mostly static sites, an API running on Flask (Python), and a Postgres database instance running on some old cluster on a different set of virtual machines. Doing the math, we have three moving parts composing this small yet powerful application, which uses a common architecture. With this in mind, we can plan and create the needed resources on a given cloud provider using best practices or, as AWS calls it, the “AWS Well-Architected Framework”.
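To make the inventory idea concrete, here is a minimal Python sketch (my own illustration, not a tool used in these migrations) that records the three moving parts of the example application, tags each one with one of the approaches named earlier, and derives a migration order from their dependencies with a simple topological sort. The dependency links and the chosen strategies are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    """The migration approaches named earlier in the post."""
    LIFT_AND_SHIFT = "lift-and-shift"
    REFACTOR = "refactor"
    RE_ENGINEER = "re-engineer"
    REPLACE = "replace"


@dataclass(frozen=True)
class Component:
    name: str            # short name of the moving part
    runs_on: str         # where it lives before the migration
    depends_on: tuple    # names of components it needs at runtime
    strategy: Strategy   # chosen approach (hypothetical choices below)


# Inventory of the three moving parts from the vSphere example.
INVENTORY = [
    Component("web", "Nginx VM on vSphere", ("api",), Strategy.REPLACE),
    Component("api", "Flask (Python) VM on vSphere", ("db",), Strategy.REFACTOR),
    Component("db", "Postgres cluster on vSphere VMs", (), Strategy.LIFT_AND_SHIFT),
]


def migration_order(inventory):
    """Return component names so that every dependency comes before its dependents."""
    by_name = {c.name: c for c in inventory}
    ordered, visiting = [], set()

    def visit(name):
        if name in ordered:
            return
        if name in visiting:
            raise ValueError(f"dependency cycle at {name!r}")
        visiting.add(name)
        for dep in by_name[name].depends_on:
            visit(dep)
        visiting.discard(name)
        ordered.append(name)

    for component in inventory:
        visit(component.name)
    return ordered


if __name__ == "__main__":
    print(migration_order(INVENTORY))  # ['db', 'api', 'web']
```

The database comes first because the API depends on it, and the web tier comes last. A cycle in the inventory raises an error, which is worth resolving before any further planning.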

Assuming that the entire underlying networking infrastructure is already in place (we will return to this in a few lines), it’s just a matter of moving/refactoring/re-engineering/replacing the components of the application into the cloud. This requires creating the servers, creating managed services for the databases, and moving the data of both the application and the database. Do not forget about updating or creating the CI/CD pipelines for building, testing and deploying the application.

This was easy enough, right? It was, not because the application was small or had just three moving parts (although that helps), but because the application did not live on any cloud provider and its components were clear and easy to understand.

The Heavy Lifting

Let’s continue with the example application mentioned earlier. The heavy lifting for this on-premise application would be the database itself. In the end, the entire application was refactored to live on Azure, so the final application used multiple services: Azure Key Vault for managing secrets, Azure AD for user authentication, Azure Static Web Apps for the entire front-end site (which had been running on an Nginx server), a new Azure Queue Storage for storing large numbers of messages, and an Azure SQL Server instance (the database was migrated from Postgres).

All these Azure services came at a price for the application: it had to be refactored to include new libraries for authenticating against and consuming Azure services, and without these services the application itself would not work as expected; and yes, it is a monolithic application. This is where the fun begins, as seen in the following image of some software developers trying to move this non-microservice application.

The application ran without any problems for two years, but after several emails with AWS representatives and some cost optimizations requested by the finance department, the company decided to take a big leap and move from Azure to AWS. As you might imagine, this is a different process from the one described before, and not only because there are now more moving parts: cloud services are now used actively in the application logic, so the application and its developers will need to learn and apply different libraries and frameworks from the new cloud provider.

When migrating workloads to the cloud, one of the most challenging parts is the application’s data, which can live in different places (assuming the documentation is about right). In most cases this process will increase the application’s downtime on “D-Day” (more on this later), but this depends fully on the application logic and on the architecture of how the data is loaded and ingested.

Welcome Infrastructure as Code (IaC)

Infrastructure can now be created and managed with code: one can create 100 servers with pre-baked software, using a for loop to manage and tag them, all created by a CI/CD server triggered by a single commit to a given repository. With this in mind, all the infrastructure for the new application can be created and managed with IaC tools, such as Terraform or Pulumi, which are cloud agnostic, or with the cloud vendors’ proprietary solutions such as AWS CloudFormation or Azure Resource Manager. Cloud providers are now also offering SDKs for a more developer-oriented experience, with more compatibility and capabilities than a given Terraform “Provider” or even their own main IaC solutions.
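As a rough illustration of the “100 servers in a for loop” idea, the sketch below uses plain Python to generate a configuration in Terraform’s JSON syntax (a `.tf.json` file) declaring 100 tagged `aws_instance` resources. The AMI ID, instance type, naming scheme and tag keys are hypothetical placeholders; in a real project Terraform’s own `count` or `for_each` meta-arguments, or a Pulumi program, would express the same loop natively.

```python
import json


def build_servers(count, ami, instance_type="t3.micro", common_tags=None):
    """Return a Terraform JSON config declaring `count` tagged aws_instance resources."""
    common_tags = common_tags or {}
    instances = {}
    for i in range(count):
        name = f"web_{i:03d}"                 # Terraform resource name: web_000, web_001, ...
        instances[name] = {
            "ami": ami,                       # pre-baked image with the software installed
            "instance_type": instance_type,
            "tags": {**common_tags, "Name": name.replace("_", "-")},
        }
    return {"resource": {"aws_instance": instances}}


config = build_servers(
    100,
    ami="ami-PLACEHOLDER",                    # hypothetical: use your own baked AMI ID
    common_tags={"Project": "cloud-migration", "ManagedBy": "terraform"},  # hypothetical tags
)

if __name__ == "__main__":
    # Redirect this output to servers.tf.json so Terraform reads it as configuration.
    print(json.dumps(config, indent=2))
```

A pipeline step could run the script (any name works, for example a hypothetical `generate.py > servers.tf.json`) followed by `terraform plan` and `terraform apply`, so a single commit ends up creating or updating all 100 servers.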

Choosing the right IaC tool/product depends fully on the application logic and the level of automation needed (which should cover the entire stack), but in the end, having a complete pipeline for the infrastructure should be one of the main goals of running our applications in the cloud, as it gives us complete control of our systems. Soon after this, we will end up using GitOps methodologies, which will increase our agility in deploying not just our applications but our entire infrastructure.

Once you have developed your entire infrastructure with an IaC tool, you are ready to deploy it “n” times with exactly the same precision, without any human intervention on the configuration your application needs, in terms of either inventory management or infrastructure requirements. This is where you will create all the environments in which the application will live, which normally are development, staging/UAT and production. Sometimes you will also need other environments for testing, experimenting or even innovating, and this will be as easy as running the same scripts to create the very same infrastructure, over and over again.
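The “deploy it n times” idea boils down to keeping one definition of the stack and rendering each environment from it, with only a small set of parameters allowed to vary. Here is a minimal sketch assuming a dictionary-based stack description; the component names, sizes and the `multi_az` flag are hypothetical, and a real IaC tool would express the same thing with variables, workspaces or stacks.

```python
from copy import deepcopy

# One definition of the stack; every environment is rendered from it.
BASE_STACK = {
    "api": {"instances": 1, "instance_type": "t3.small"},
    "database": {"engine": "postgres", "multi_az": False},
}

# Hypothetical per-environment overrides: only sizing changes, never the shape.
OVERRIDES = {
    "development": {},
    "staging": {"api": {"instances": 2}},
    "production": {
        "api": {"instances": 4, "instance_type": "t3.large"},
        "database": {"multi_az": True},
    },
}


def render(environment):
    """Return the full stack definition for one environment."""
    stack = deepcopy(BASE_STACK)          # never mutate the shared base definition
    for component, settings in OVERRIDES[environment].items():
        stack[component].update(settings)
    stack["tags"] = {"Environment": environment}
    return stack


if __name__ == "__main__":
    for env in OVERRIDES:
        print(env, render(env))
```

Because every environment starts from the same base, a throwaway environment for experiments is just one more key in `OVERRIDES`.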

Enter Kubernetes

This tale begins at Kubernetes’ own birthplace, Google (home of GCP), where it was conceived and where some of today’s internet backbone was also born. Sadly, the application is not yet running on a microservice architecture, so Kubernetes has not had the chance to shine in this scenario. I will discuss in another post how Kubernetes is also enabling cloud adoption and helping applications move more smoothly into the cloud since, if used right, it is cloud agnostic.

D-Day

The so-called D-Day normally refers to the day on which an attack or operation is to be initiated. The best-known D-Day was during World War II: June 6, 1944, the day of the Normandy landings that began the Western Allied effort to liberate Western Europe from Nazi Germany. With this in mind, our D-Day will be the day on which the migration is initiated and completed, along with the termination and decommissioning of the now-legacy systems. In some cases, though, the old application will not be terminated and will live on for at least six more months, not because it is a business requirement, but because it takes that long for someone to remember it is still there (I have seen this so many times).

As you might imagine, planning an invasion is not an easy task: it requires many parts to complete their individual purposes in a predefined way, so that in the end the achievements of all these teams (brigades) add up to the greater goal, a successful invasion and the surrender or defeat of the enemy. The very same planning is needed when migrating multiple systems, as mentioned in the Heavy Lifting section.

All of this terminology can also be applied to a migration, in our case the migration from Azure to AWS. On D-Day all systems on Azure will be shut down and the services on AWS will be brought to life. But if you read that previous statement again, you may find that something doesn’t add up, and you are right.

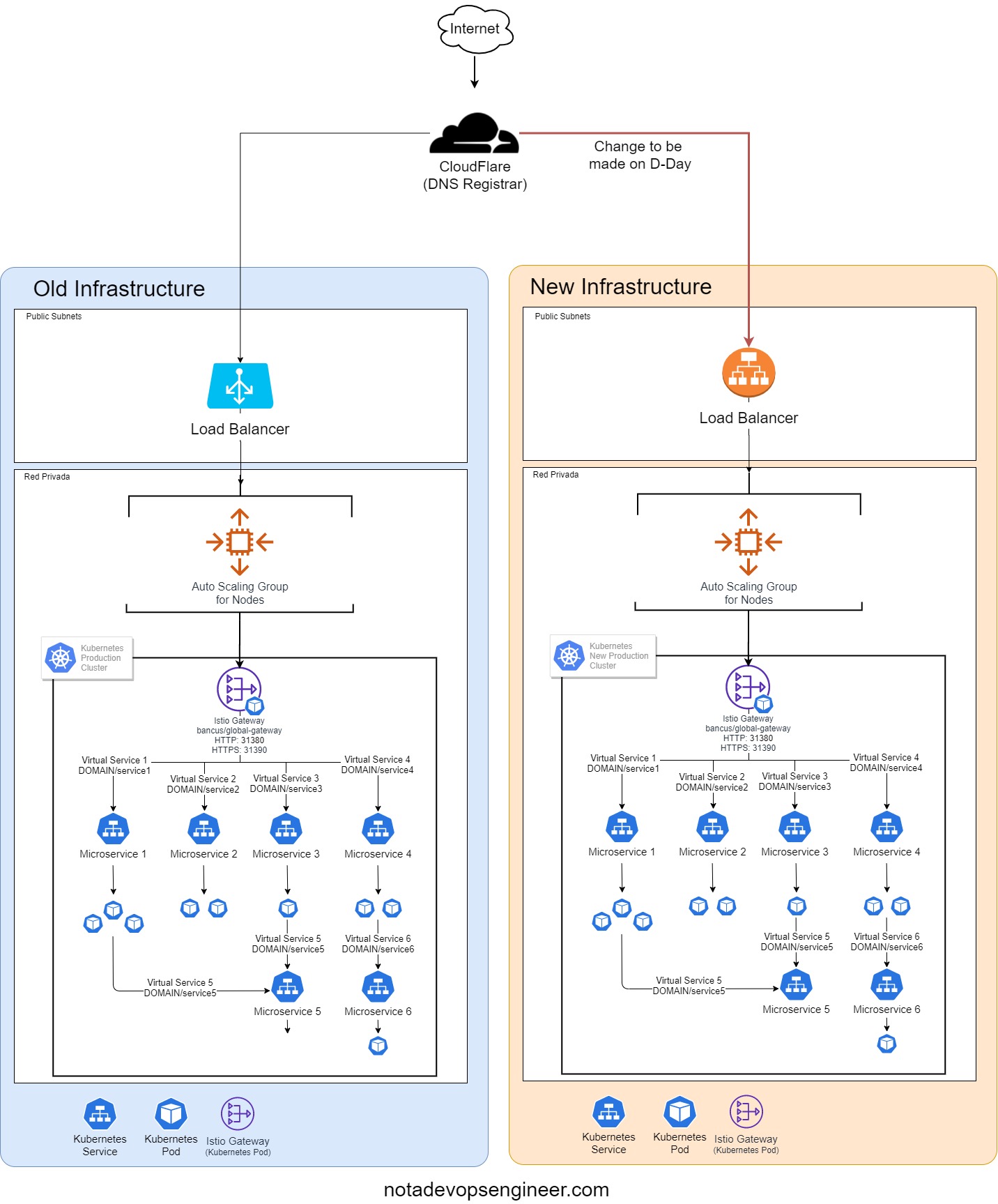

For a successful and smooth transition from one cloud to another, on D-Day we need the entire application stack already running on the new infrastructure, ready to receive traffic, or at least with only a short downtime while the data or any other service is replicated/moved to the new cloud provider. On D-Day, the team in charge of moving the application and doing the heavy lifting should have a fully functional application already running and accepting requests from the internet; in other words, a production-grade environment ready to accept traffic as soon as the DNS records are updated to point from the old cloud provider to the new one. Please refer to the following diagram.
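Before updating the DNS records, it is worth having an explicit go/no-go gate that proves the new stack is actually answering. A minimal sketch using only the Python standard library, assuming each service exposes a health URL that returns HTTP 200 when healthy (no such endpoints are defined in this post, so the URLs are yours to supply):

```python
import urllib.request
from urllib.error import HTTPError


def http_status(url, timeout=5.0):
    """Return the HTTP status code for `url`, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except HTTPError as err:           # 4xx/5xx responses still carry a status code
        return err.code
    except (OSError, ValueError):      # DNS/connection errors, timeouts, malformed URLs
        return None


def cutover_report(endpoints, check=http_status):
    """Check every endpoint on the new stack; switch DNS only if all return 200."""
    results = {url: check(url) for url in endpoints}
    # An empty endpoint list is treated as "not ready": nothing was verified.
    ready = bool(results) and all(status == 200 for status in results.values())
    return ready, results
```

On D-Day you would call `cutover_report` with the new stack’s health URLs, for example a hypothetical `https://new-stack.example.com/health`, and only touch DNS when it returns `True`. The `check` parameter exists so the gate can be tested without network access. A common companion practice is lowering the TTL of the affected DNS records well ahead of D-Day, so resolvers pick up the change quickly once you switch.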

Sadly, here we lose the true meaning of a D-Day: in a real one, we would be doing all the heavy lifting of creating and deploying the application and infrastructure while the application is shut down and, as in real combat, everything could go wrong and we could even lose the battle, with our engineering team dying while trying to deploy the application and migrate the data into the new managed database service. That was a little rough, but I hope you get the idea of why a D-Day per se should never be attempted in a cloud migration.

Instead, all the planning and heavy lifting should be completed before D-Day. It’s not cheating; it’s basically doing the homework on time and working smarter, not harder. For this operation, a detailed plan of all action items should be made, each with the engineer in charge, an ETA, a brief description and comments, so that in the end the entire team has a journal for better understanding all the parts and steps needed for a successful invasion of the new cloud provider.
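The plan itself can be as simple as a structured list. The sketch below models each action item with the fields mentioned above (engineer in charge, ETA, description, comments), renders the team journal ordered by ETA, and lists items that were due before D-Day but are still open. The entries, owners and dates are hypothetical, and in practice this usually lives in a ticketing tool or a shared document rather than in code.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class ActionItem:
    """One entry of the migration plan."""
    title: str
    owner: str          # engineer in charge
    eta: datetime       # expected completion time
    description: str
    comments: list = field(default_factory=list)
    done: bool = False


def journal(items):
    """Render the plan as a plain-text journal, ordered by ETA."""
    lines = []
    for item in sorted(items, key=lambda i: i.eta):
        mark = "x" if item.done else " "
        lines.append(f"[{mark}] {item.eta:%Y-%m-%d %H:%M} {item.title} ({item.owner})")
        lines.append(f"    {item.description}")
        lines.extend(f"    - {comment}" for comment in item.comments)
    return "\n".join(lines)


def open_before(items, d_day):
    """Items not done yet that were due before D-Day: these block the cutover."""
    return [item for item in items if not item.done and item.eta < d_day]


# Hypothetical plan entries, for illustration only.
PLAN = [
    ActionItem("Deploy API to AWS production", "alice", datetime(2024, 1, 15, 9, 0),
               "Full stack running and accepting test traffic.", ["Smoke tests must pass"]),
    ActionItem("Create VPC and subnets with IaC", "bob", datetime(2024, 1, 10, 9, 0),
               "Networking for all environments.", done=True),
]

if __name__ == "__main__":
    print(journal(PLAN))
    print([item.title for item in open_before(PLAN, datetime(2024, 1, 20))])
```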

The only things that should happen on D-Day, because they can’t happen on any other day, are normally: the migration or replication of data from the old systems to the new ones, the update or creation of the DNS records for the new infrastructure and, most importantly, the documentation of all the processes completed during the invasion. Of course, this has been the case in almost all the operations I have conducted, but rest assured there are other strategies for conquering a new cloud.
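Since moving the data is one of the few things that has to happen on D-Day, a quick consistency check before switching DNS can save a lot of pain. This post does not prescribe one; the sketch below is my own illustration that compares row counts and an order-independent SHA-256 fingerprint of the rows between source and target. It uses in-memory SQLite databases as stand-ins for the real engines, and it assumes both sides return values with the same Python representation, which is often not true across different database engines, where you would normalize types first.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table):
    """Return (row_count, digest) for a table; the digest ignores row order."""
    # Table names cannot be bound as SQL parameters, so only pass trusted names.
    rows = conn.execute(f'SELECT * FROM "{table}"').fetchall()
    row_hashes = sorted(hashlib.sha256(repr(row).encode()).hexdigest() for row in rows)
    digest = hashlib.sha256("".join(row_hashes).encode()).hexdigest()
    return len(rows), digest


def mismatched_tables(source, target, tables):
    """Return the tables whose row count or contents differ between source and target."""
    return [t for t in tables if table_fingerprint(source, t) != table_fingerprint(target, t)]


if __name__ == "__main__":
    # In-memory stand-ins for the old and new databases.
    source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (source, target):
        conn.execute("CREATE TABLE users (id INTEGER, email TEXT)")
    source.executemany("INSERT INTO users VALUES (?, ?)", [(1, "a@example.com"), (2, "b@example.com")])
    target.executemany("INSERT INTO users VALUES (?, ?)", [(2, "b@example.com"), (1, "a@example.com")])
    print(mismatched_tables(source, target, ["users"]))  # [] -> data matches
```

An empty list means every checked table matches; anything else is a reason to hold the DNS switch.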

Final Thoughts

Hooray! You made it to the end. It has been quite a journey, but by now you have a better glimpse of how migrations of workloads into the cloud happen and are conducted, from a 10,000-foot view, of course. Cloud computing typically delivers value only to the extent that an organization commits to it. Moving a few apps to the cloud may not lead to much return on investment, but a large-scale transition can lead to considerable value creation.

A cloud journey requires patience and dedication on everyone’s part. While strong leadership and DevOps methodologies are needed to succeed, there are technologies, processes and people involved, all of which need to be addressed and, in the end, share the same main goal: conquering new territories.

Build On!