The rise of Managed Kubernetes

The rise of Kubernetes as a Service (KaaS).

In the image: Marvel character Galactus, who is well known for devouring entire planets, injecting his own type of virus through YAML files into different civilizations that run on separate cloud providers.

A market introduction

It goes without saying that Kubernetes has become the de facto container orchestrator. It is evolving in such a way that people are starting to call it the operating system of the cloud (some say this is already the case), and not just for the cloud but also for on-premises data center environments.

Over the last couple of years a race has been going on among all the major cloud providers, including AWS, Azure, GCP, IBM and even DigitalOcean. They all share the same goal: getting you to run your Kubernetes workloads on their own managed Kubernetes service. The winner is yet to be declared, and the race is tight and fully dominated by the big players in the market.

While most managed Kubernetes services have been around for fewer than three years, one offering was well ahead of the rest. Given that Kubernetes was originally developed at Google, it’s no surprise that Google Kubernetes Engine (GKE), released in 2015, is ahead of its competitors by roughly three years. Its largest competitors, AKS and EKS, both launched in 2018. Thanks to this, GKE has a head start that is still noticeable today in the platform’s maturity and in all the features supported within the GCP ecosystem.

Welcome KaaS

As the author of the fantastic book “Cloud Native with Kubernetes” said in one of the chapters, “The smartest thing to do when running Kubernetes workloads is to pay an extra amount of money and let the smartest people handle and manage the entire complexity of Kubernetes”. Well, that’s not precisely what he said, but it is close in spirit. What the author meant is that implementing Kubernetes is tough (trust me on this one), and not just the implementation but also the maintenance: achieving stability and reliability for all the running components, as well as for the applications running in the different types of Kubernetes objects (Deployments, DaemonSets, etc.).

OK, but what is Kubernetes as a Service? It is a model in which a provider manages the infrastructure, networking, storage and even upgrades of the Kubernetes system for you, to ensure rapid delivery, scalability, and accessibility. In the simplest terms, it’s an evolution of Kubernetes that offers easy deployment, optimized operations, and “infinite” scalability out of the box.

Benefits

As a Certified Kubernetes Administrator (CKA) I’ve had the opportunity to work on multiple Kubernetes setups of different flavors: local Raspberry Pis, on-premises servers, multi-cloud federated clusters and multi-region clusters. Let me tell you, there are a lot of benefits to using KaaS, but in my experience these are my “favorites”:

1. Security

Deploying a Kubernetes cluster can be easy once we understand the service delivery ecosystem and the data center configuration, but a careless deployment can leave openings for external attacks. With KaaS, we can have policy-based user management, so that users of the infrastructure get the permissions they need to access the environment based on their business needs. A typical self-managed Kubernetes installation in the cloud often exposes the API server to the internet, inviting attackers to try to break in. With KaaS, some vendors offer security options to hide the Kubernetes API server and restrict access to it.
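To make the idea of restricting access to the API server a bit more concrete, here is a minimal Python sketch of an allow-list check, similar in spirit to the "authorized networks" style of setting that some managed services offer. The CIDR ranges and the function name `is_client_allowed` are hypothetical and only for illustration; real providers enforce this at the network level, not in application code.

```python
import ipaddress

# Hypothetical allow-list: only clients in these networks may reach the API server.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),      # assumed internal VPC range
    ipaddress.ip_network("203.0.113.0/24"),  # assumed office range (documentation block)
]


def is_client_allowed(client_ip: str) -> bool:
    """Return True if client_ip falls inside any allowed network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in ALLOWED_NETWORKS)


if __name__ == "__main__":
    for ip in ("10.1.2.3", "203.0.113.7", "198.51.100.9"):
        print(ip, "allowed" if is_client_allowed(ip) else "denied")
```

Anything outside the listed ranges is denied by default, which is the posture you want for a control plane endpoint.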

2. Elasticity (Scaling of infrastructure)

With KaaS in place, IT infrastructure can scale rapidly, thanks to the high level of automation that KaaS provides. This saves the admin team (the DevOps team) a lot of time and bandwidth. Autoscaling in the cloud is one of the best things that ever happened to the IT world!
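One concrete instance of this automation is the Kubernetes Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The Python sketch below applies that rule in a simplified form: the function name, the default bounds, and the sample numbers are my own assumptions, and details of the real controller such as the tolerance band and stabilization windows are left out.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Simplified HPA rule: scale proportionally to metric/target, then clamp."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds (assumed defaults above).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods at 90% average CPU with a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 pods at 30% average CPU with a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

Node-level scaling (adding or removing worker nodes) follows a different mechanism, but the idea is the same: you declare a target and the platform does the arithmetic for you.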

3. Serverless

Major cloud providers are now moving towards serverless services, and Kubernetes hasn’t been left behind. In a nutshell, it’s the ability to run serverless compute for containers. It removes the need to provision and manage servers (in Kubernetes lingo, worker nodes). It lets you specify and pay for resources per application, and improves security through application isolation by design.

4. Increased operational efficiency

For me, this is the most important one: having the entire Kubernetes system managed, updated, and provisioned by the cloud provider. You, as the DevOps engineer or the person in charge of the Kubernetes clusters, can now rely on built-in automated provisioning, repair, monitoring, and scaling, which at the end of the day minimizes infrastructure maintenance of all kinds.

The Sellers

As previously mentioned, some sellers are already well established in the KaaS market, and they compete fiercely for you to choose their managed Kubernetes service.

Amazon Elastic Kubernetes Service (EKS)

EKS is a managed Kubernetes offering from AWS. It is based on key AWS building blocks such as Amazon Elastic Compute Cloud (EC2), Amazon EBS, Amazon Virtual Private Cloud (VPC) and Identity and Access Management (IAM). AWS also has an integrated container registry in the form of Amazon Elastic Container Registry (ECR), which provides secure, low-latency access to container images.

Azure Kubernetes Service (AKS)

AKS is a managed container management platform available in Microsoft Azure. It is built on top of Azure VMs, Azure Storage, Virtual Networking and Azure Monitor. Azure Container Registry (ACR) may be provisioned in the same resource group as the AKS cluster for private access to container images.

Google Kubernetes Engine (GKE)

GKE takes advantage of Google Cloud Platform’s core services, such as Compute Engine, Persistent Disks, VPC and Stackdriver. Google has made Kubernetes available in on-premises environments and other public cloud platforms through the Anthos service. Anthos is a control plane that runs in GCP, but manages the life cycle of clusters launched in hybrid and multicloud environments. Google extended GKE with a managed Istio service for service mesh capabilities. It also offers Cloud Run, a serverless platform based on the Knative open source project, to run containers without launching clusters.

IBM Cloud Kubernetes Service (IKS)

IKS is a managed offering to create Kubernetes clusters of compute hosts to deploy and manage containerized applications on IBM Cloud. As a certified provider, IKS provides intelligent scheduling; self-healing; horizontal scaling; service discovery and load balancing; automated rollouts and rollbacks; and secret and configuration management for modern applications. IBM is one of the few cloud providers to offer a managed Kubernetes service on bare metal. Via its acquisition of Red Hat, IBM offers a choice of community Kubernetes or OpenShift clusters available through IKS.

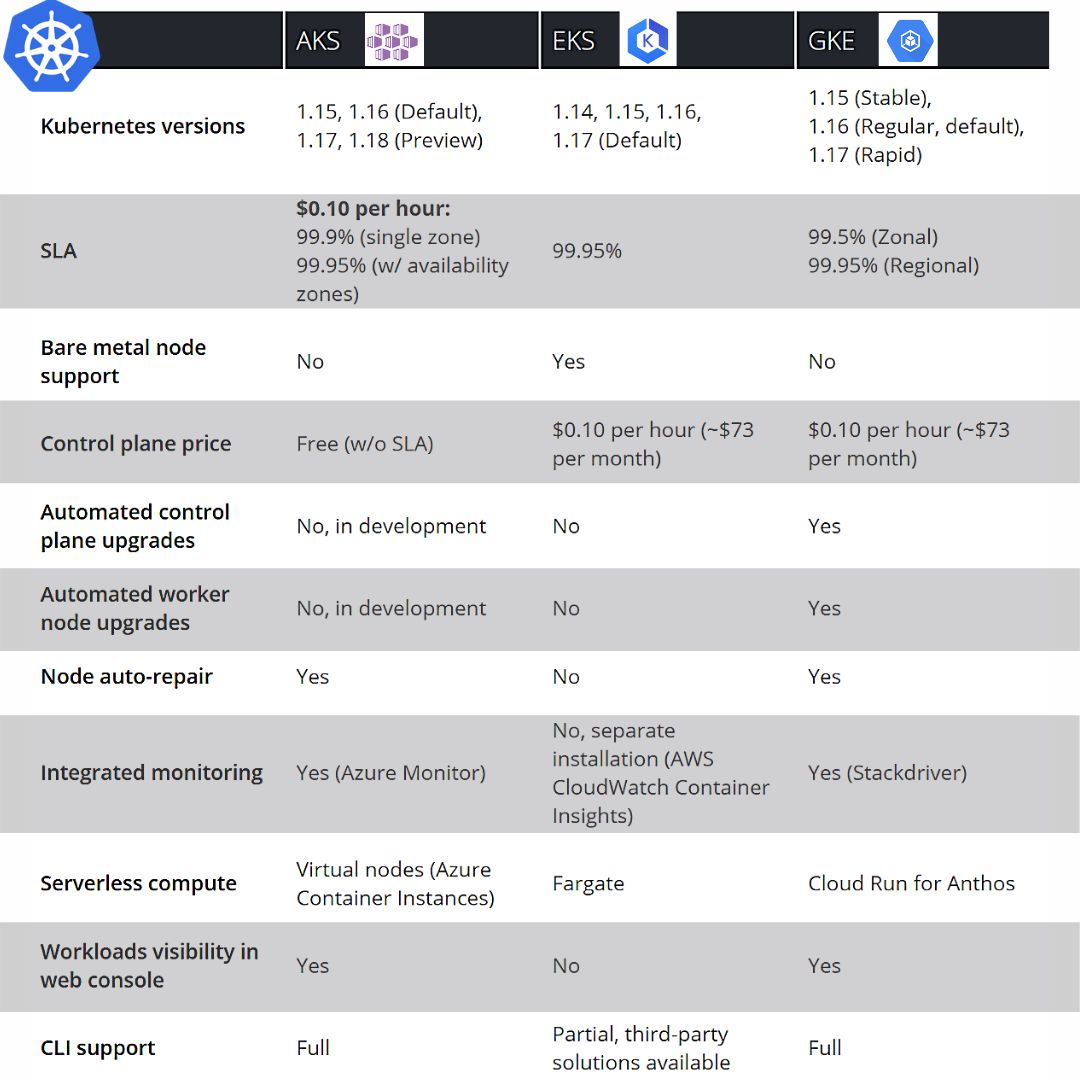

I wanted to take the time to build a table comparing all these KaaS offerings, but then I remembered what I said earlier about letting the smart people do the work while we, the final consumers, use it. I applied that here, so the image above is one shared in my LinkedIn feed by Pavan Belagatti.

That table shares a lot of important information. One of the most important items is the price of having a cloud provider manage your Kubernetes components. It is interesting to see that AWS and GCP charge the same price, and AKS is actually free, at least at the time of this writing. Another important topic is integrated monitoring: AWS does not have an out-of-the-box monitoring/logging solution for EKS, so one has to set up CloudWatch custom metrics and then pay for them, whereas GKE and AKS already ship with a pretty decent solution.

Serverless compute is another great feature that is becoming available for running and configuring Kubernetes workloads. I had the chance to play around (on production workloads) with Fargate profiles on EKS, and it’s a really important feature and a revolution in how you run your pods in a serverless way. I will explore this in future posts.
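As a rough illustration of how Fargate profiles decide which pods run serverless: a profile carries selectors made of a namespace plus optional labels, and a pod that matches any selector is scheduled onto Fargate instead of a worker node. The Python sketch below models only that matching logic. The class and function names, namespaces and labels are hypothetical, and this is a simplified model rather than the actual EKS implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Selector:
    """Simplified stand-in for one selector of a Fargate profile."""
    namespace: str
    labels: Dict[str, str] = field(default_factory=dict)


def pod_matches(selector: Selector, pod_namespace: str,
                pod_labels: Dict[str, str]) -> bool:
    """A pod matches if the namespace is equal and it has every selector label."""
    if selector.namespace != pod_namespace:
        return False
    return all(pod_labels.get(key) == value
               for key, value in selector.labels.items())


def runs_on_fargate(selectors: List[Selector], pod_namespace: str,
                    pod_labels: Dict[str, str]) -> bool:
    """A pod is treated as serverless if any selector matches it."""
    return any(pod_matches(s, pod_namespace, pod_labels) for s in selectors)


if __name__ == "__main__":
    # Hypothetical profile: everything in "batch", plus labelled pods in "web".
    profile = [Selector("batch"), Selector("web", {"compute": "serverless"})]
    print(runs_on_fargate(profile, "batch", {"app": "report"}))
    print(runs_on_fargate(profile, "web", {"app": "frontend"}))
```

The practical consequence is that going serverless is a matter of namespaces and labels on your pods, not of changing the workloads themselves.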

The Buyers

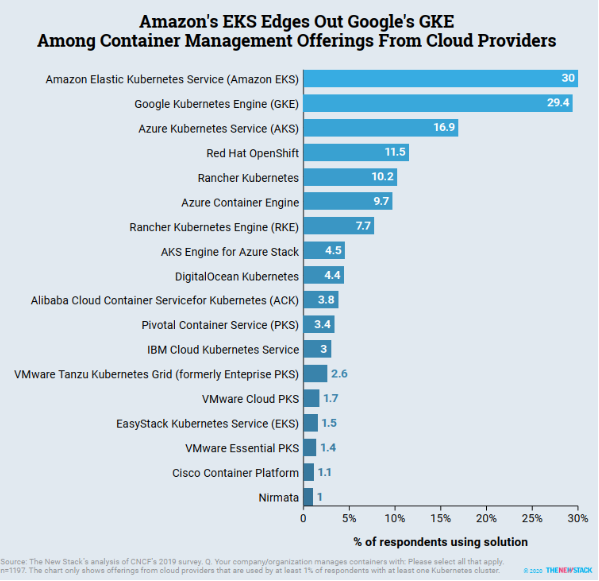

As you would expect after all the previous information, there is no single winner in this KaaS market, as most of the offerings are locked to their specific cloud provider’s services and infrastructure. But looking at real data: The New Stack released “The State of the Kubernetes Ecosystem, Second Edition” in mid-2020, which included a survey with a specific section on KaaS. It came as a surprise when the final data showed that EKS was now ahead of GKE, which had been the preferred option for the last couple of years.

Adoption of KaaS is real, and people are now moving towards it for all the previously mentioned benefits. AWS is now leading, but not by much over its main competitor, GKE. The Azure service is also getting a lot of attention thanks to its integrations with existing Azure services.

The End

KaaS is well suited to pretty much every organization, no matter the size or the project, and it should be the preferred platform to run containerized workloads in any cloud. On top of the efficiency of Kubernetes clusters, KaaS brings an overall improvement to the entire container infrastructure and ecosystem, allowing DevOps, SRE, development, operations and security teams to focus on application and business matters rather than on Kubernetes maintenance itself.

Use Managed Kubernetes if You Can.

With the “run less software” principle in mind, I would highly recommend outsourcing the operation of your Kubernetes clusters to any of the cloud providers described above. Installing, maintaining, securing, configuring, upgrading and keeping a Kubernetes cluster reliable is a lot of heavy lifting, so it makes sense for almost all companies not to do it themselves, as it is something that doesn’t differentiate your business (95% of the time).

You should use managed Kubernetes if you can; it is the best option for most requirements in terms of cost, overhead and quality. If in the end you do self-host your clusters, don’t underestimate the engineering time involved in both the initial setup and the ongoing maintenance and support overhead.

Reference

Please take some time to go over the major cloud providers’ KaaS websites: